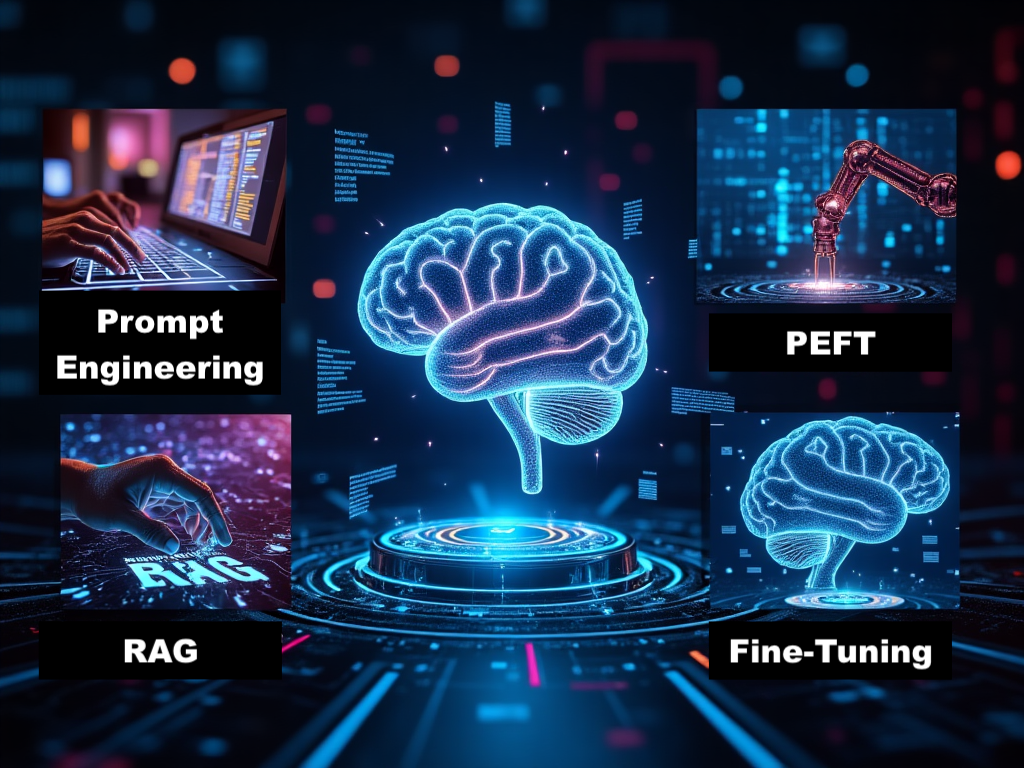

Parameter-Efficient Fine-Tuning (PEFT) adapts large language models (LLMs) to new tasks at a lower cost than traditional full fine-tuning. Instead of updating every weight, PEFT trains only a small subset of the model's parameters and keeps the rest frozen, which reduces the compute and memory required. This allows faster adaptation to specific tasks while preserving most of the model's pre-trained knowledge, making it a cost-effective way to improve performance on specialized tasks.
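To make "a small subset of the parameters" concrete, here is a minimal sketch that counts how many parameters would be trained if only one small layer were updated. The toy model, its layer names, and its shapes are hypothetical and chosen purely for illustration. Real PEFT methods differ in which parameters they train or add.

```python
from math import prod

# Hypothetical toy model: parameter name -> shape (sizes are illustrative only).
param_shapes = {
    "embedding.weight": (32000, 512),
    "layer0.attention.weight": (512, 512),
    "layer0.mlp.weight": (512, 2048),
    "layer1.attention.weight": (512, 512),
    "layer1.mlp.weight": (512, 2048),
    "task_head.weight": (512, 2),
}


def trainable_fraction(shapes, trainable_names):
    """Return (trainable count, total count, trainable share of the total)."""
    total = sum(prod(shape) for shape in shapes.values())
    trainable = sum(prod(shapes[name]) for name in trainable_names)
    return trainable, total, trainable / total


# Assumption: only the small task head is updated; every other tensor stays frozen.
trainable, total, fraction = trainable_fraction(param_shapes, {"task_head.weight"})
print(f"{trainable} of {total} parameters are trainable ({fraction:.4%})")
```

In this toy setup the trainable share is well under 0.1% of the model, which is the kind of gap that makes PEFT less resource-intensive than updating every weight.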
