Prompt Engineering or Prompting is the process of structuring or crafting an instruction or prompt in order to produce the best possible output from a generative artificial intelligence (AI) model. A prompt is natural language text describing the task that an AI should perform. A prompt for a text-to-text language model can be a query, a command, or a longer statement including context, instructions, and conversation history. (Wikipedia).

This is Part 2 of my series in LLM Customization. In this post, we will look at Prompting. It is the most fundamental LLM customization technique and is simple, low-cost, and requires no model modifications.

There are a few common prompting techniques:

- Zero-Shot Prompting – Asking the LLM to answer without prior examples.

- Few-Shot Prompting – Providing a few examples in the prompt to improve accuracy.

- Chain-of-Thought (CoT) Prompting – Encouraging step-by-step reasoning to enhance complex problem-solving.

- Meta Prompting – Guide the reasoning process by introducing structure, constraints, or multi-step instructions.

- Self-Consistency Prompting – Generate multiple solutions and select the most frequently appearing answer.

- Tree of Thought (ToT) Prompting – Exploring multiple reasoning paths before selecting an answer.

- Prompt Chaining – Not exactly a prompting technique, it is using the output of the previous prompt as input to the next prompt.

Let’s go through each one and look at usable examples.

Zero-Shot Prompting

Zero-Shot Prompting is simply directly asking the LLM to answer without prior examples. And it works because of it’s vast knowledge and inference capabilities.

For example:

Prompt:

Q: Is 1 odd or even?

A:

Response:

Odd ✅

Or

Prompt:

Q: Is 2 odd or even?

A:

Response:

Even ✅

However, it fails if it doesn’t have the necessary knowledge or is unable to make an inference.

Prompt:

grok 1

Response:

Could you clarify the pattern or context for “grok 1”? Are you referring to a specific sequence, rule, or analogy? ❌

In this case, we can use few-shot prompting.

Few-Shot Prompting

Few-Shot Prompting is providing a few examples (shots) in the prompt to improve accuracy.

For example:

Prompt:

Q: grok 1

A: 1

Q: grok 2

A: 4

Q: grok 3

A: 9

Q: grok 4

A:

Response:

16 ✅

In this case, it worked. However, it may fail for more complex problems.

Chain-of-thought (CoT) Prompting

CoT is generating a a series of intermediate reasoning steps– a chain of thought — to significantly improve the ability of LLMs to perform complex reasoning (Wei et al. 2022).

Let’s say you have a more complex problem:

Prompt:

Q: A juggler can juggle 16 balls. Half of the balls are golf balls, and half of the golf balls are blue. How many blue golf balls are there?

Response:

8 ❌

You give an example or two (few-shot CoT) on the step-by-step solution:

Prompt:

Q: Roger has 5 tennis balls. He buys 2 more cans of tennis

balls. Each can has 3 tennis balls. How many tennis balls does

he have now?

A: Roger started with 5 balls. 2 cans of 3 tennis balls each is 6

tennis balls. 5 + 6 = 11. The answer is 11.

Q: A juggler can juggle 16 balls. Half of the balls are golf balls,

and half of the golf balls are blue. How many blue golf balls are

there?

A:

Response: The juggler can juggle 16 balls. Half of the balls are golf

balls. So there are 16 / 2 = 8 golf balls. Half of the golf balls are

blue. So there are 8 / 2 = 4 blue golf balls. The answer is 4. ✅

You can also do zero-shot CoT by simply adding “Let’s think step by step” to the prompt as shown in Kojima et al. (2022):

Prompt:

Q: A juggler can juggle 16 balls. Half of the balls are golf balls, and half of the golf balls are blue. How many blue golf balls are there?

A: Let’s think step by step.

Response:

There are 16 balls in total. Half of the balls are golf balls. That means that there are 8 golf balls. Half of the golf balls are blue. That means that there are 4 blue golf balls. ?

Meta Prompting

Meta Prompting, introduced in Zhang et al. (2024), prioritizes structural and syntactical considerations over traditional content-centric methods like Few-Shot and CoT.

For example, for a math problem you would do the following:

Prompt:

Problem Statement:

• Problem: [question to be answered]

Solution Structure:

1. Begin the response with ”Let’s think step by step.”

2. Follow with the reasoning steps, ensuring the solution process is broken down clearly

and logically.

3. End the solution with the final answer encapsulated in a LaTeX-formatted box,... ,

for clarity and emphasis.

4. Finally, state ”The answer is [final answer to the problem].”, with the final answer

presented in LaTeX notation. ———-

Response:

...

Self-Consistency Prompting

Self-consistency prompting is an advanced prompting technique that first samples a diverse set of reasoning paths instead of only taking the greedy (most probable) one, and then selects the most consistent answer (Wang et al., 2022). This is possible because of the temperature hypeparameter. This allows the model to be more deterministic or more probabilistic.

Here’s code that does this. It is also available in the GitHub repo.

import json

import os

import re

from collections import Counter

from dotenv import load_dotenv

import google.generativeai as genai

# Gemini API Key

load_dotenv()

GOOGLE_API_KEY = os.getenv("GOOGLE_API_KEY")

if not GOOGLE_API_KEY:

raise ValueError("Missing API key! Set GOOGLE_API_KEY in your .env file.")

genai.configure(api_key=os.environ.get("GOOGLE_API_KEY"))

def clean_json_response(text):

# Try to extract JSON content between backticks if present

json_pattern = r'```(?:json)?\s*([\s\S]*?)\s*```'

match = re.search(json_pattern, text)

if match:

return match.group(1).strip()

# If no backticks found, try to find JSON object between { and }

json_pattern = r'(\{[\s\S]*\})'

match = re.search(json_pattern, text)

if match:

return match.group(1).strip()

# Otherwise return the original text

return text.strip()

def generate_responses(user_prompt, model="gemini-2.0-flash-001", num_samples=3, temperature=0.7):

model = genai.GenerativeModel(model)

responses = []

system_prompt = """

You are an expert math tutor. Always explain step-by-step solutions in a clear and structured manner.

Your response MUST be valid JSON without any text before or after the JSON object.

Do not include markdown formatting, code blocks, or any other text.

Format your response exactly like this:

{

"solution": [

"Step 1: [explanation]",

"Step 2: [explanation]",

...

],

"answer": "Final numerical result"

}

"""

prompt = system_prompt + "\n\n" + user_prompt

for _ in range(num_samples):

try:

response = model.generate_content(

[{"role": "user", "parts": [prompt]}],

generation_config=genai.types.GenerationConfig(temperature=temperature, max_output_tokens=1000)

)

response_text = clean_json_response(response.text)

json_data = json.loads(response_text) # Check if the response is valid JSON

responses.append(json_data)

except json.JSONDecodeError as e:

print(f"JSONDecodeError: {e}.")

except Exception as e:

print(f"An unexpected error occurred: {e}")

return responses

def self_consistency(prompt, num_samples=5):

responses = generate_responses(prompt, num_samples=num_samples)

final_answers = []

for response in responses:

if response is not None:

final_answers.append(response["answer"])

if final_answers:

most_common = Counter(final_answers).most_common(1)[0][0] # Get most frequent answer

else:

most_common = "No valid answers found"

return most_common, responses

# Example Prompt

prompt = "A train leaves City A at 10:00 AM, traveling at 60 km/h toward City B, which is 180 km away. Another train leaves City B at 11:00 AM, traveling at 90 km/h toward City A. At what time will they meet?"

final_answer, all_responses = self_consistency(prompt)

print("All Responses:")

# Display each response

for index, response in enumerate(all_responses, start=1):

print(f"\nResponse {index}:")

for line in response.get("solution", []): # Iterate through solution steps

print(line)

print(f"Final Answer: {response.get('answer', 'No answer found')}")

# Display final answer

print("\nFinal Answer (Majority Vote):", final_answer)Tree of Thought (ToT) Prompting

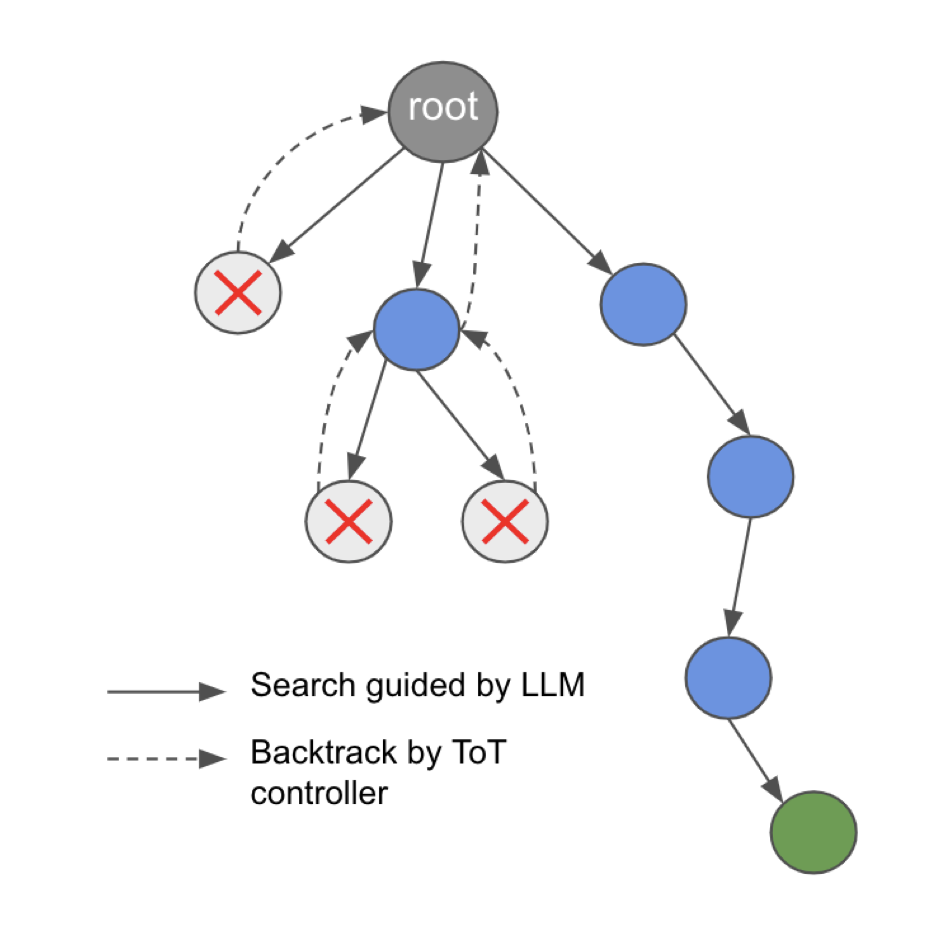

ToT is another advanced prompting technique that allows LLMs to perform deliberate decision making by considering multiple different reasoning paths and self-evaluating choices to decide the next course of action (Yao, et al., 2023). It is inspired by the human mind’s approach for solving complex reasoning tasks through trial and error. In this process, the human mind explores the solution space through a tree-like thought process (Long, 2023). ToT is ideal for complex, multi-step reasoning tasks where evaluating different paths before making a decision leads to the best or better outcomes.

In simple terms, the LLM is directed to evaluate each reasoning path by evaluating the choices that opens up for that path. This is done for every path. The drawing below (from Long, 2023) illustrates this:

Example code is available here.

Prompt Chaining

Prompt Chaining is using the output of the previous prompt as input to the next prompt. Each prompt in the chain can leverage all the techniques above.