We have previously through the process of recognizing numbers utilizing our artificial neural network (ANN). If you haven’t gone through that post on, you can do so now. However, we ran the recognition task on images from the MNIST dataset. Even though we used the test data, it’s still cannot be considered real-world. It’s clean, well-labeled, and structured, with a lot of the noise and ambiguity removed.

They often say GIGO: garbage in, garbage out. For best results, the input data needs to match the format and characteristics of the model and training data. It needs to be clean with noise or ambiguity removed. We do that via preprocessing. The preprocessing will usually be unique to your application. So in this post, we will go through the preprocessing images for recognition by our model.

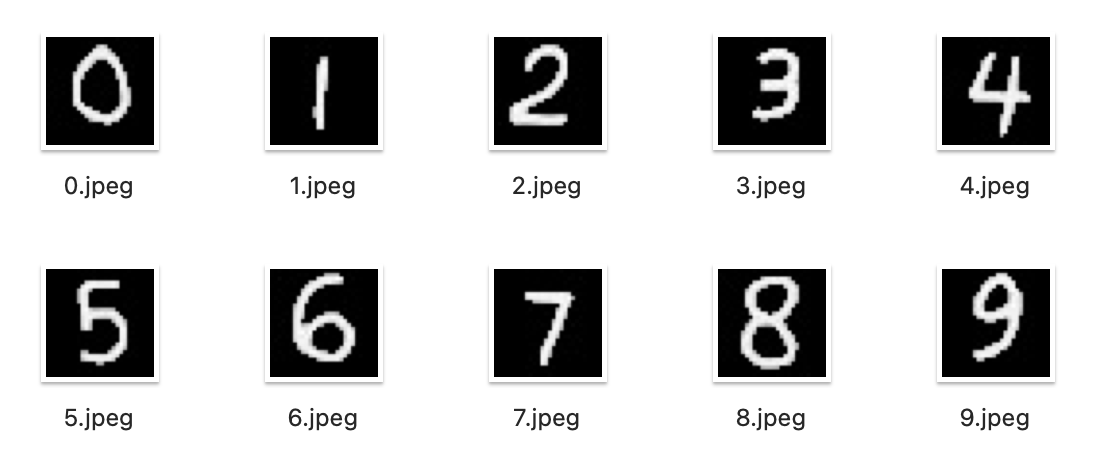

Here are the some raw images of number I wrote (pardon my handwriting). If we run the recognizer on this, we get abysmal results.

First, we install OpenCV. OpenCV is a library for computer vision tasks like image processing.

pip3 install opencv-python

Next, fire up your favorite editor and start by importing dependencies and define a preprocessing function.

import cv2

import numpy as np

import argparse

import os

def preprocess(image_path, output_path=None):

Next, we load the image and convert it to grayscale:

# Load the image

image = cv2.imread(image_path)

# Check if the image was loaded successfully

if image is None:

print(f"Error: Could not load image from {image_path}")

return

# Convert to grayscale

grayscale_image = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)

Here are the grayscale images:

Next, we invert the images because our model prefers a dark background.

# Invert colors if necessary

average_pixel_value = np.mean(grayscale_image)

if average_pixel_value > 128: # Mostly bright; Adjust threshold as necessary

grayscale_image = cv2.bitwise_not(grayscale_image)

Here are the inverted images:

Next, we need to normalize and threshold the images.

# Normalize the image to make the highest value 255 (white)

min_val, max_val, _, _ = cv2.minMaxLoc(grayscale_image)

if max_val > min_val:

grayscale_image = (grayscale_image - min_val) / (max_val - min_val) * 255

grayscale_image = np.uint8(grayscale_image)

# Set lower pixel values to 0 (thresholding)

# Calculate the histogram of pixel values

hist = cv2.calcHist([image], [0], None, [256], [0, 256])

# Find the most common pixel value (background) and set to 0

threshold_value = np.argmax(hist) * 0.8

grayscale_image[grayscale_image < threshold_value] = 0

Here are the normalized images:

Next, we center the images. We do this by creating a binary mask and identifying the extents where the bright pixels are. This will be the bounds of the digit. We will then crop the grayscale image along these bounds and then position the cropped image into the center of a blank image with allocated borders.

# Threshold to create a binary image (255 for content, 0 for background)

_, binary_image = cv2.threshold(grayscale_image, 1, 255, cv2.THRESH_BINARY)

# # Find the bounding box of the non-blank regions

coords = cv2.findNonZero(binary_image) # Find all non-zero points (content)

x, y, w, h = cv2.boundingRect(coords) # Get the bounding box of content

# # Crop the image to the bounding box

cropped_image = grayscale_image[y:y+h, x:x+w]

# Create a blank canvas with optimal size and border

max_dim = max(w, h)

border_size = int(max_dim * 0.25) # 25% of the maximum dimension

canvas_size = max_dim + 2 * border_size

# Create a blank canvas with the calculated size

canvas = np.zeros((canvas_size, canvas_size), dtype=np.uint8)

# Calculate the position to center the cropped image on the canvas

y_offset = (canvas.shape[0] - h) // 2

x_offset = (canvas.shape[1] - w) // 2

# Place the cropped image onto the center of the canvas

canvas[y_offset:y_offset+h, x_offset:x_offset+w] = cropped_image

Here are the centered images:

Then, we resize and save the image

# Resize the canvas to 28x28

resized_image = cv2.resize(canvas, (28, 28), interpolation=cv2.INTER_AREA)

# Determine output path

if output_path is None:

output_path = image_path # Overwrite input image if no output path is given

# Save the result as a JPG

cv2.imwrite(output_path, resized_image)

print(f"Processed image saved as {output_path}")

Now, we just add code to retrieve the filename(s) as a command line parameter and run the preprocessing function on each file.

if __name__ == "__main__":

# Set up argument parser

parser = argparse.ArgumentParser(description="Preprocess image for recognition.")

parser.add_argument('input_images', nargs='+', type=str, help="Path(s) to the input image(s) (JPG format).")

parser.add_argument('--output_image', type=str, help="Path to save the output image.")

# Parse the arguments

args = parser.parse_args()

# Process input image

for input_image in args.input_images:

print(f"Processing {input_image}...")

preprocess(input_image, args.output_image)

Finally, save the preprocessor and run it. The preprocessor as well as the real-world samples are available on the GitHub repo.

python3 preprocess.py *.jpeg

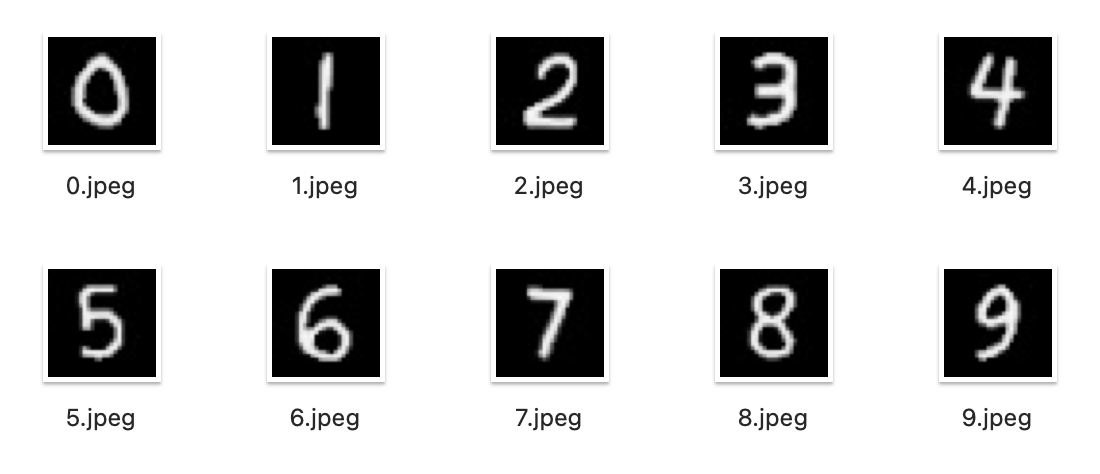

Running the recognizer on the images, we get:

Image: 0.jpeg

1/1 ???????????????????? 0s 65ms/step

Recognized Digit: 0

Image: 1.jpeg

1/1 ???????????????????? 0s 5ms/step

Recognized Digit: 1

Image: 2.jpeg

1/1 ???????????????????? 0s 5ms/step

Recognized Digit: 2

Image: 3.jpeg

1/1 ???????????????????? 0s 5ms/step

Recognized Digit: 3

Image: 4.jpeg

1/1 ???????????????????? 0s 5ms/step

Recognized Digit: 4

Image: 5.jpeg

1/1 ???????????????????? 0s 5ms/step

Recognized Digit: 5

Image: 6.jpeg

1/1 ???????????????????? 0s 5ms/step

Recognized Digit: 6

Image: 7.jpeg

1/1 ???????????????????? 0s 5ms/step

Recognized Digit: 2

Image: 8.jpeg

1/1 ???????????????????? 0s 5ms/step

Recognized Digit: 5

Image: 9.jpeg

1/1 ???????????????????? 0s 5ms/step

Recognized Digit: 9

After preprocessing, the model is recognizing better. 8 out of 10 or 80%. But the performance for real-world data is not good and it tells me that we probably need to improve our training, our model, or both. We will tackle this next.